Hi,

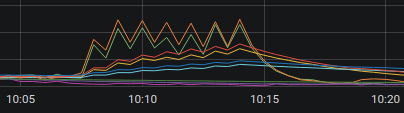

When I add proxy_requests to a check, the check starts flapping. If I remove proxy_requests, it stops flapping.

My check looks like this:

---
type: CheckConfig
api_version: core/v2
metadata:
  name: clamav-freshclam-process
  namespace: prod
  annotations:
    sensu.io/plugins/slack/config/channel: '#sensu-go-prod'
spec:
  command: check-process.rb -p 'freshclam' -u clamav -w 1 -W 1 -c 1 -C 1
  interval: 60
  publish: true
  proxy_requests:
    entity_attributes:
    - entity.labels.role != 'esdata'
    - entity.labels.role != 'esmaster'
  handlers:
  - slack
  runtime_assets:
  - sensu-plugins-process-check
  - sensu-ruby-runtime
  subscriptions:
  - system
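
To make the intent of the two entity_attributes expressions explicit, here is a minimal sketch of the selection I expect them to perform. It assumes the expressions are combined with a logical AND and evaluated against every entity in the namespace, and that an entity with no `role` label passes both tests. The entity names and labels are hypothetical, and this is only a model of the selector, not Sensu's actual implementation.

```python
# Hypothetical entities; the names and labels are made up for illustration.
entities = [
    {"name": "web-01", "labels": {"role": "web"}},
    {"name": "es-data-01", "labels": {"role": "esdata"}},
    {"name": "es-master-01", "labels": {"role": "esmaster"}},
    {"name": "db-01", "labels": {}},  # no role label at all
]


def matches(entity):
    """Model of the two expressions, assumed to be ANDed together."""
    role = entity["labels"].get("role")  # None when the label is missing
    return role != "esdata" and role != "esmaster"


# Every entity that passes both tests would receive a proxy request.
selected = [e["name"] for e in entities if matches(e)]
print(selected)
```

Under these assumptions the selector is broad: it keeps every entity that is not an Elasticsearch data or master node, rather than picking out a specific group.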

In the agent logs, we see many calls when the check is triggered with proxy_requests set. Without proxy_requests, this is not the case.

Without proxy_requests:

May 25 13:57:52 my-server sensu-agent[98272]: {"component":"agent","level":"info","msg":"scheduling check execution: clamav-freshclam-process","time":"2020-05-25T13:57:52Z"}

May 25 13:57:52 my-server sensu-agent[98272]: {"assets":["sensu-plugins-process-check","sensu-ruby-runtime"],"check":"clamav-freshclam-process","component":"agent","level":"debug","msg":"fetching assets for check","namespace":"prod","time":"2020-05-25T13:57:52Z"}

May 25 13:57:52 my-server sensu-agent[98272]: {"check":"clamav-freshclam-process","component":"agent","entity":"my-server","event_uuid":"f3e93270-b65d-4e87-a979-86b57d8a09c4","level":"info","msg":"sending event to backend","time":"2020-05-25T13:57:52Z"}

With proxy_requests:

logs.json (38.5 KB)
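
To compare the two runs, the agent log lines can be tallied per message. Below is a minimal sketch, assuming each line has the journal format shown above (a syslog prefix followed by a JSON object). The helper name `count_messages` is hypothetical, and the attached logs.json may be structured differently.

```python
import json
from collections import Counter


def count_messages(lines, check_name):
    """Count agent log messages that refer to the given check."""
    counts = Counter()
    for line in lines:
        start = line.find("{")  # the JSON payload follows the syslog prefix
        if start == -1:
            continue
        try:
            record = json.loads(line[start:])
        except json.JSONDecodeError:
            continue  # skip lines whose payload is not valid JSON
        msg = record.get("msg", "")
        # Some messages carry the check name in a field, others only in msg.
        if record.get("check") == check_name or check_name in msg:
            counts[msg] += 1
    return counts


sample = [
    'May 25 13:57:52 my-server sensu-agent[98272]: {"component":"agent","level":"info","msg":"scheduling check execution: clamav-freshclam-process","time":"2020-05-25T13:57:52Z"}',
    'May 25 13:57:52 my-server sensu-agent[98272]: {"check":"clamav-freshclam-process","component":"agent","entity":"my-server","event_uuid":"f3e93270-b65d-4e87-a979-86b57d8a09c4","level":"info","msg":"sending event to backend","time":"2020-05-25T13:57:52Z"}',
]
counts = count_messages(sample, "clamav-freshclam-process")
for msg, n in counts.items():
    print(n, msg)
```

Running it over one check interval from each capture should show how many executions are scheduled per interval in each case.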

Is this normal behavior?

We are running Sensu backend 5.20.0 on Kubernetes with 3 replicas.

The agents run version 5.20.1-12427 on Debian Buster.

Thanks